Update README.md

- merge

---

# trashpanda-org/Qwen2.5-72B-Azalea-v0

## Recommended settings

<p><b>Context/instruct template</b>: ChatML.</p>
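For frontends that build raw prompts, the ChatML layout can be sketched as follows. This is a minimal illustration that assumes the standard `<|im_start|>`/`<|im_end|>` markers used by Qwen2.5 models. `to_chatml` is a hypothetical helper, and the chat template bundled with the model's tokenizer remains the authoritative format.

```python
def to_chatml(messages, add_generation_prompt=True):
    """Render a list of {"role": ..., "content": ...} dicts as a ChatML prompt.

    Hypothetical helper for illustration; prefer the tokenizer's own chat
    template when it is available.
    """
    parts = []
    for msg in messages:
        # Each turn is wrapped in start/end markers, with the role on the first line.
        parts.append(f"<|im_start|>{msg['role']}\n{msg['content']}<|im_end|>\n")
    if add_generation_prompt:
        # Leave an open assistant turn for the model to complete.
        parts.append("<|im_start|>assistant\n")
    return "".join(parts)


if __name__ == "__main__":
    prompt = to_chatml([
        {"role": "system", "content": "You are {{char}}."},
        {"role": "user", "content": "Hello!"},
    ])
    print(prompt)
```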

<p><b>Samplers</b>: temperature at 0.9, min_p at 0.05, top_a at 0.3, TFS at 0.75, repetition_penalty at 1.03, and DRY if your backend supports it.</p>
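To make two of these settings concrete, here is a minimal sketch of how temperature and min_p are commonly applied to a token distribution. The dictionary keys follow one common naming style and are an assumption, since backends such as KoboldCpp or text-generation-webui may spell them differently. `temperature_then_min_p` is a hypothetical helper: real backends may apply samplers in a different order, and this sketch leaves out top_a, TFS, repetition penalty and DRY entirely.

```python
import math

# Recommended values from this model card; key names are an assumption
# and may differ in your backend.
RECOMMENDED_SAMPLERS = {
    "temperature": 0.9,
    "min_p": 0.05,
    "top_a": 0.3,
    "tfs": 0.75,
    "repetition_penalty": 1.03,
}


def temperature_then_min_p(logits, temperature, min_p):
    """Illustrative only: scale logits by temperature, softmax, then zero out
    tokens whose probability is below min_p times the top probability."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)
    exps = [math.exp(x - peak) for x in scaled]  # subtract max for numerical stability
    total = sum(exps)
    probs = [e / total for e in exps]
    threshold = min_p * max(probs)
    kept = [p if p >= threshold else 0.0 for p in probs]
    norm = sum(kept)  # always > 0 because the top token survives
    return [p / norm for p in kept]


if __name__ == "__main__":
    probs = temperature_then_min_p(
        [2.0, 1.0, -3.0],
        RECOMMENDED_SAMPLERS["temperature"],
        RECOMMENDED_SAMPLERS["min_p"],
    )
    print([round(p, 3) for p in probs])
```

With min_p at 0.05, any token less than 5% as likely as the top candidate is discarded before sampling, which trims the long tail without fixing the number of candidates the way top_k does.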

A virt-io derivative prompt worked best during our testing, but feel free to use what you like.

## Thank you!

Big thanks to the folks in the trashpanda-org Discord server for testing and sending over some logs!

## Reviews

> ok so i've tried a few different bots with Azalea, and it's pretty freaking good. it stays really true to the characters and their personalities. as for impersonating - i haven't had that issue so far

— Shreksophoner

> It can definitely get filthy, but it's impersonating {{user}} every once in a while.
>
> Reasoning seems to work well? I'm currently just running off of the recommended sampler for Snowdrop and lowering the temp every few responses.

— Ema

> Really liking the model, it's def up there with Snowdrop v0.
>
> It's able to handle side chars really well, even between rerolls, feeling like it's not just a fluke that side chars are integrated.
>
> Rerolls vary, which is good. Really loving the prose.
>
> Thinking kinda weird since it's often hallucinating giving itself its own direction, but the end result still good, so I suppose it's not really a problem.
>
> There's little to no slops, when there's some, it's really minor that I wouldn't really mind it.
>
> It's yappy at times (sometimes you'd need more than 1k output), but I'd say even when it's yapping, it's a good yap.
>
> Not as horny as in it'd jump on you, but it definitely teases. I'd say it's good, actually prefer this way.
>
> There's no positivity bias for sure, which is a 👍
>
> It's definitely smart, understanding my reply really well, at least in its final response, not sure wassup with some hallucination on the thinking as shown on the 4-5th images tho.

— Raihanbook

> Too much "heaven and earth" slop, impersonation from time to time. Good with NSFW in general but rushes. Long answers with good narrative, V3-like vibe, a bit positivity bias maybe? Rushes events.
>
> Loved the fluff, sugar daddy smut was too soft. NSFW actions good but rushes to the end. Dead dove is unplayable. 6/10

— Carmenta

> The model is unhinged and horny (a positive for me), it suffers issues from Qwen's quirk of being prone to impersonation like most Qwen 72B models and still have some slops here and there.
>
> However, that was under the circumstance of traditional samplers. idk, I haven't played with nsigma much, but I feel like it's refreshing. The prose improved so much and no impersonation across 10 swipes
>
> I'll say with nsigma this model is 10/10 for me. But I'll want to use it on Featherless, which doesn't support such parameters. I am going to test a bit more with traditional samplers and leave more feedback while it is still up.
>
> PS Tested this model after some claude/gemini log, so far no impersonation like my previous test did on a fresh chat

— OMGWTFBBQ

> I've noticed that just like any LLMs would it seems to have a certain habit or sometimes repeating what it said, or rerolling but still it is similar to the previous responses on lower temp which is about 0.7-0.8 but on high temps such as 1.18 or 1 when its creative it seems to struggle with the consistency, which is length and context of response, not sure if that has anything to do with temps however.
>
> As a non thinking model I am really impressed by its capabilities to generate responses that has high quality despite sometimes it started going onto its own merry way of repeating what it said (Not sure what the issue is but it does repeat pretty often even on high temp, not the full sentence but it'll be like probably the end part of a sentence will be the same as the first reroll?)
>
> It follow prompts pretty well so that's a pos too!
>
> It seems to not understand how to push the story onwards without the character leaving the scene if its response length was too long.
>
> There is certain times when it's tweaking a bit but hey, what LLM won't tweak right :thumbsmile:
>
> if Aza has a slightly better consistency in good quality responses I think it would be perfect, I enjoyed my roleplay with it otherwise!

— Sprout

> i feel like Azalea is actually pretty great
>
> ok so from my further testing, it seems like it's either i get a perfect response within the first message, or i have to resend like 5 times to get another one of similar quality

— Shreksophoner

> The first few responses were really good, but after a few rerolls the llm does seem to struggle with following a set structure. Content-wise, it was decent, left me some room to advance the story. Creativity wasn't anything mind-blowing, but i still think it did a decent job. Prose was creative too.
>
> The quality of responses seems to be whats holding it back. Everything else was decent to good for me

— simon

> Can maintain subtlety about things from nudges. I like it. Reasoning, even if it's not Snowdrop, keeps track of secrets and what char/user knows, it's sick. Comes up with nice little details now and again that's not in the card. Speech patterns take hold, character portrayal is on point most of the time, dialogue is decent to good. Prose is generally better than I remember Snowdrop had at times, worse in others, situational.
>
> Without reasoning: It's fine, but narration seems to suffer compared to with-reasoning responses.
>
> Writes more diverse exposition for smut than any Marigold ever did, interesting. I've noticed it can be more horny for thinking responses.
>
> Drawbacks, however: tested it with a Spanish-speaking bot that Snowdrop does well with, and it's not interspersing Spanish in its responses like I'd expect it to. Other testers (and in my own testing), POV switch and user impersonation happens rarely. Tested with regular Qwen samplers for the most part - top nsigma didn't do well this time.
>
> Overall, did pretty well in my testing.

— Severian

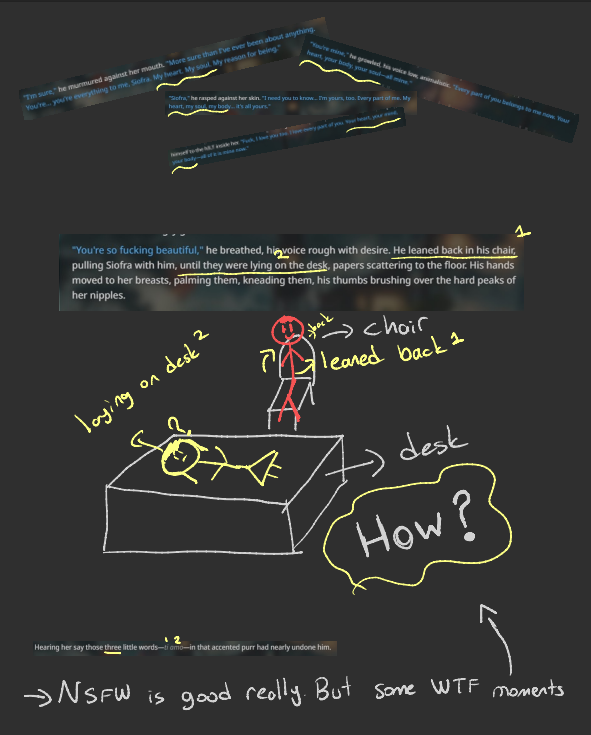

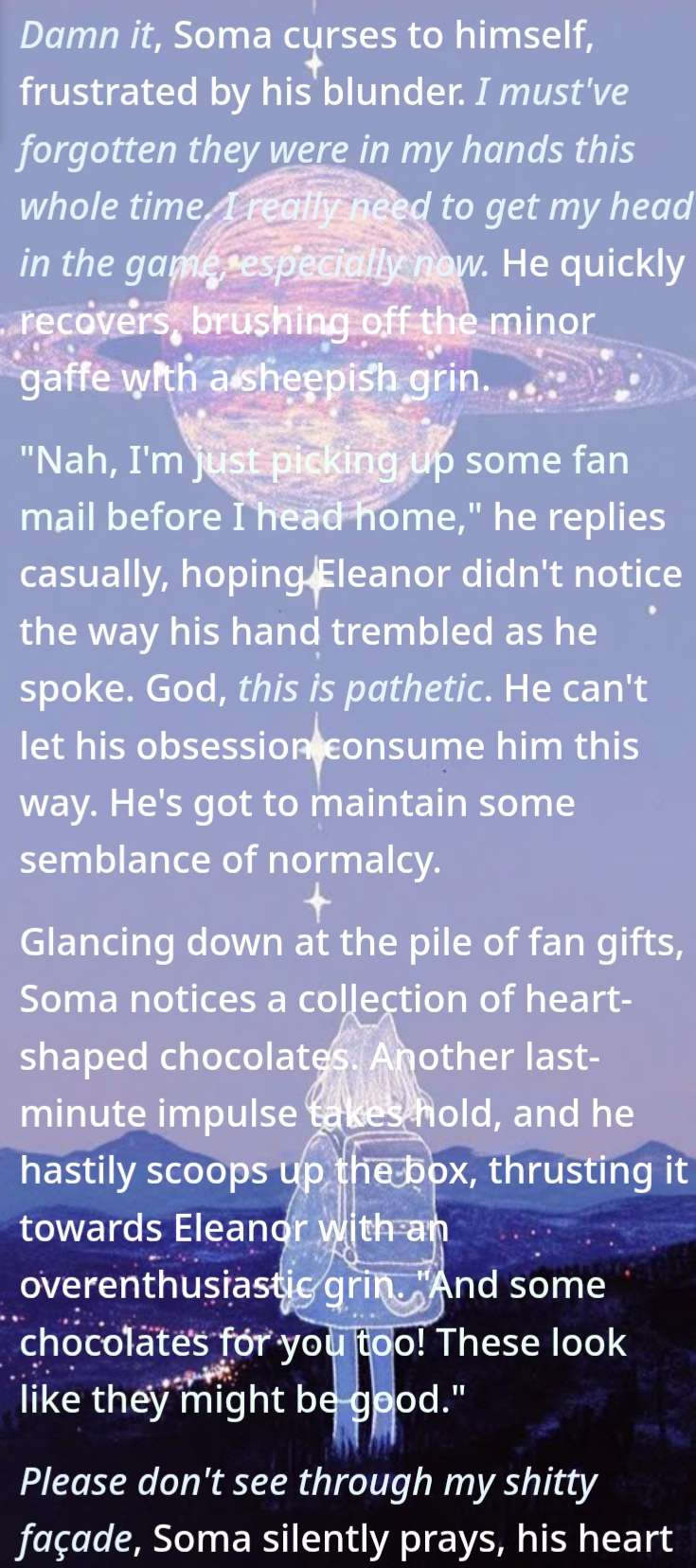

## Some logs


This is a merge of pre-trained language models created using [mergekit](https://github.com/cg123/mergekit).

## Merge Details
