Upload folder using huggingface_hub

This view is limited to 50 files because it contains too many changes.

- .dockerignore +6 -0

- .github/ISSUE_TEMPLATE/bug_report.yml +146 -0

- .github/ISSUE_TEMPLATE/feature_request.yml +53 -0

- .github/workflows/docker.yml +46 -0

- .github/workflows/ghcr.yml +60 -0

- .github/workflows/issue-translator.yml +15 -0

- .github/workflows/sync.yml +39 -0

- .gitignore +45 -0

- .node-version +1 -0

- .vscode/settings.json +7 -0

- Dockerfile +44 -0

- LICENSE +21 -0

- README.md +459 -0

- components.json +21 -0

- docker-compose.yml +12 -0

- docs/How-to-deploy-to-Cloudflare-Pages.md +14 -0

- docs/deep-research-api-doc.md +332 -0

- env.tpl +101 -0

- eslint.config.mjs +21 -0

- next.config.ts +158 -0

- package.json +114 -0

- pnpm-lock.yaml +0 -0

- postcss.config.mjs +7 -0

- public/logo.png +0 -0

- public/logo.svg +14 -0

- public/screenshots/main-interface.png +0 -0

- public/scripts/eruda.min.js +0 -0

- public/scripts/pdf.worker.min.mjs +0 -0

- src/app/api/ai/anthropic/[...slug]/route.ts +55 -0

- src/app/api/ai/azure/[...slug]/route.ts +56 -0

- src/app/api/ai/deepseek/[...slug]/route.ts +53 -0

- src/app/api/ai/google/[...slug]/route.ts +57 -0

- src/app/api/ai/mistral/[...slug]/route.ts +52 -0

- src/app/api/ai/ollama/[...slug]/route.ts +51 -0

- src/app/api/ai/openai/[...slug]/route.ts +52 -0

- src/app/api/ai/openaicompatible/[...slug]/route.ts +51 -0

- src/app/api/ai/openrouter/[...slug]/route.ts +54 -0

- src/app/api/ai/pollinations/[...slug]/route.ts +52 -0

- src/app/api/ai/xai/[...slug]/route.ts +52 -0

- src/app/api/crawler/route.ts +36 -0

- src/app/api/mcp/[...slug]/route.ts +91 -0

- src/app/api/mcp/route.ts +50 -0

- src/app/api/mcp/server.ts +404 -0

- src/app/api/search/bocha/[...slug]/route.ts +47 -0

- src/app/api/search/exa/[...slug]/route.ts +47 -0

- src/app/api/search/firecrawl/[...slug]/route.ts +48 -0

- src/app/api/search/searxng/[...slug]/route.ts +49 -0

- src/app/api/search/tavily/[...slug]/route.ts +47 -0

- src/app/api/sse/live/route.ts +105 -0

- src/app/api/sse/route.ts +111 -0

.dockerignore

ADDED

@@ -0,0 +1,6 @@
+.dockerignore
+node_modules
+README.md
+.next
+.git
+out

.github/ISSUE_TEMPLATE/bug_report.yml

ADDED

@@ -0,0 +1,146 @@
+name: Bug report
+description: Create a report to help us improve
+title: "[Bug]: "
+labels: ["bug"]
+
+body:
+  - type: markdown
+    attributes:
+      value: "## Describe the bug"
+  - type: textarea
+    id: bug-description
+    attributes:
+      label: "Bug Description"
+      description: "A clear and concise description of what the bug is."
+      placeholder: "Explain the bug..."
+    validations:
+      required: true
+
+  - type: markdown
+    attributes:
+      value: "## To Reproduce"
+  - type: textarea
+    id: steps-to-reproduce
+    attributes:
+      label: "Steps to Reproduce"
+      description: "Steps to reproduce the behavior:"
+      placeholder: |
+        1. Go to '...'
+        2. Click on '....'
+        3. Scroll down to '....'
+        4. See error
+    validations:
+      required: true
+
+  - type: markdown
+    attributes:
+      value: "## Expected behavior"
+  - type: textarea
+    id: expected-behavior
+    attributes:
+      label: "Expected Behavior"
+      description: "A clear and concise description of what you expected to happen."
+      placeholder: "Describe what you expected to happen..."
+    validations:
+      required: true
+
+  - type: markdown
+    attributes:
+      value: "## Screenshots"
+  - type: textarea
+    id: screenshots
+    attributes:
+      label: "Screenshots"
+      description: "If applicable, add screenshots to help explain your problem."
+      placeholder: "Paste your screenshots here or write 'N/A' if not applicable..."
+    validations:
+      required: false
+
+  - type: markdown
+    attributes:
+      value: "## Deployment"
+  - type: checkboxes
+    id: deployment
+    attributes:
+      label: "Deployment Method"
+      description: "Please select the deployment method you are using."
+      options:
+        - label: "Docker"
+        - label: "Vercel"
+        - label: "Server"
+
+  - type: markdown
+    attributes:
+      value: "## Desktop (please complete the following information):"
+  - type: input
+    id: desktop-os
+    attributes:
+      label: "Desktop OS"
+      description: "Your desktop operating system."
+      placeholder: "e.g., Windows 10"
+    validations:
+      required: false
+  - type: input
+    id: desktop-browser
+    attributes:
+      label: "Desktop Browser"
+      description: "Your desktop browser."
+      placeholder: "e.g., Chrome, Safari"
+    validations:
+      required: false
+  - type: input
+    id: desktop-version
+    attributes:
+      label: "Desktop Browser Version"
+      description: "Version of your desktop browser."
+      placeholder: "e.g., 89.0"
+    validations:
+      required: false
+
+  - type: markdown
+    attributes:
+      value: "## Smartphone (please complete the following information):"
+  - type: input
+    id: smartphone-device
+    attributes:
+      label: "Smartphone Device"
+      description: "Your smartphone device."
+      placeholder: "e.g., iPhone X"
+    validations:
+      required: false
+  - type: input
+    id: smartphone-os
+    attributes:
+      label: "Smartphone OS"
+      description: "Your smartphone operating system."
+      placeholder: "e.g., iOS 14.4"
+    validations:
+      required: false
+  - type: input
+    id: smartphone-browser
+    attributes:
+      label: "Smartphone Browser"
+      description: "Your smartphone browser."
+      placeholder: "e.g., Safari"
+    validations:
+      required: false
+  - type: input
+    id: smartphone-version
+    attributes:
+      label: "Smartphone Browser Version"
+      description: "Version of your smartphone browser."
+      placeholder: "e.g., 14"
+    validations:
+      required: false
+
+  - type: markdown
+    attributes:
+      value: "## Additional Logs"
+  - type: textarea
+    id: additional-logs
+    attributes:
+      label: "Additional Logs"
+      description: "Add any logs about the problem here."
+      placeholder: "Paste any relevant logs here..."
+    validations:
+      required: false

.github/ISSUE_TEMPLATE/feature_request.yml

ADDED

@@ -0,0 +1,53 @@
+name: Feature request
+description: Suggest an idea for this project
+title: "[Feature Request]: "
+labels: ["feature"]
+
+body:
+  - type: markdown
+    attributes:
+      value: "## Is your feature request related to a problem? Please describe."
+  - type: textarea
+    id: problem-description
+    attributes:
+      label: Problem Description
+      description: "A clear and concise description of what the problem is. Example: I'm always frustrated when [...]"
+      placeholder: "Explain the problem you are facing..."
+    validations:
+      required: true
+
+  - type: markdown
+    attributes:
+      value: "## Describe the solution you'd like"
+  - type: textarea
+    id: desired-solution
+    attributes:
+      label: Solution Description
+      description: A clear and concise description of what you want to happen.
+      placeholder: "Describe the solution you'd like..."
+    validations:
+      required: true
+
+  - type: markdown
+    attributes:
+      value: "## Describe alternatives you've considered"
+  - type: textarea
+    id: alternatives-considered
+    attributes:
+      label: Alternatives Considered
+      description: A clear and concise description of any alternative solutions or features you've considered.
+      placeholder: "Describe any alternative solutions or features you've considered..."
+    validations:
+      required: false
+
+  - type: markdown
+    attributes:
+      value: "## Additional context"
+  - type: textarea
+    id: additional-context
+    attributes:
+      label: Additional Context
+      description: Add any other context or screenshots about the feature request here.
+      placeholder: "Add any other context or screenshots about the feature request here..."
+    validations:
+      required: false

.github/workflows/docker.yml

ADDED

@@ -0,0 +1,46 @@
+name: Publish Docker image
+
+on:
+  push:
+    tags:
+      # Push events matching v*, such as v1.0, v20.15.10, etc. to trigger workflows
+      - "v*"
+
+jobs:
+  push_to_registry:
+    name: Push Docker image to Docker Hub
+    runs-on: ubuntu-latest
+    steps:
+      - name: Check out the repo
+        uses: actions/checkout@v3
+      - name: Log in to Docker Hub
+        uses: docker/login-action@v2
+        with:
+          username: ${{ secrets.DOCKER_USERNAME }}
+          password: ${{ secrets.DOCKER_PASSWORD }}
+
+      - name: Extract metadata (tags, labels) for Docker
+        id: meta
+        uses: docker/metadata-action@v4
+        with:
+          images: xiangfa/deep-research
+          tags: |
+            type=raw,value=latest
+            type=ref,event=tag
+
+      - name: Set up QEMU
+        uses: docker/setup-qemu-action@v2
+
+      - name: Set up Docker Buildx
+        uses: docker/setup-buildx-action@v2
+
+      - name: Build and push Docker image
+        uses: docker/build-push-action@v4
+        with:
+          context: .
+          platforms: linux/amd64,linux/arm64
+          push: true
+          tags: ${{ steps.meta.outputs.tags }}
+          labels: ${{ steps.meta.outputs.labels }}
+          cache-from: type=gha
+          cache-to: type=gha,mode=max
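The trigger and tagging rules in this workflow can be sketched in TypeScript. This is an illustration only, not code from the repository: the function names are hypothetical, and the sketch assumes that GitHub's `v*` filter matches a literal `v` followed by any characters except `/`, and that `type=ref,event=tag` emits the Git tag name unchanged.

```typescript
// Hypothetical helpers; only the "v*" filter, the image name, and the two
// tag rules are taken from the workflow above.
const IMAGE = "xiangfa/deep-research";

// Assumed reading of the "v*" filter: a literal "v", then any characters except "/".
function matchesTagFilter(tagName: string): boolean {
  return /^v[^/]*$/.test(tagName);
}

// Mirrors the two metadata rules: type=raw,value=latest and type=ref,event=tag.
function imageTagsFor(gitRef: string): string[] {
  const prefix = "refs/tags/";
  if (!gitRef.startsWith(prefix)) return []; // branch pushes do not trigger the workflow
  const tagName = gitRef.slice(prefix.length);
  if (!matchesTagFilter(tagName)) return [];
  return [`${IMAGE}:latest`, `${IMAGE}:${tagName}`];
}

console.log(imageTagsFor("refs/tags/v1.0"));
console.log(imageTagsFor("refs/heads/main"));
```

Under these assumptions, every matching tag push republishes `latest` alongside the versioned tag, so `latest` always points at the most recently pushed tag rather than the highest version.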

.github/workflows/ghcr.yml

ADDED

@@ -0,0 +1,60 @@
+name: Create and publish a Docker image
+
+# Configures this workflow to run every time a tag matching `v*` is pushed.
+on:
+  push:
+    tags:
+      # Push events matching v*, such as v1.0, v20.15.10, etc. to trigger workflows
+      - "v*"
+
+# Defines two custom environment variables for the workflow. These are used for the Container registry domain, and a name for the Docker image that this workflow builds.
+env:
+  REGISTRY: ghcr.io
+  IMAGE_NAME: ${{ github.repository }}
+
+# There is a single job in this workflow. It's configured to run on the latest available version of Ubuntu.
+jobs:
+  build-and-push-image:
+    runs-on: ubuntu-latest
+    # Sets the permissions granted to the `GITHUB_TOKEN` for the actions in this job.
+    permissions:
+      contents: read
+      packages: write
+      attestations: write
+      id-token: write
+      #
+    steps:
+      - name: Checkout repository
+        uses: actions/checkout@v4
+      # Uses the `docker/login-action` action to log in to the Container registry using the account and password that will publish the packages. Once published, the packages are scoped to the account defined here.
+      - name: Log in to the Container registry
+        uses: docker/login-action@v2
+        with:
+          registry: ${{ env.REGISTRY }}
+          username: ${{ github.actor }}
+          password: ${{ secrets.GITHUB_TOKEN }}
+      # This step uses [docker/metadata-action](https://github.com/docker/metadata-action#about) to extract tags and labels that will be applied to the specified image. The `id` "meta" allows the output of this step to be referenced in a subsequent step. The `images` value provides the base name for the tags and labels.
+      - name: Extract metadata (tags, labels) for Docker
+        id: meta
+        uses: docker/metadata-action@v5
+        with:
+          images: ${{ env.REGISTRY }}/${{ env.IMAGE_NAME }}
+      # This step uses the `docker/build-push-action` action to build the image, based on your repository's `Dockerfile`. If the build succeeds, it pushes the image to GitHub Packages.
+      # It uses the `context` parameter to define the build's context as the set of files located in the specified path. For more information, see [Usage](https://github.com/docker/build-push-action#usage) in the README of the `docker/build-push-action` repository.
+      # It uses the `tags` and `labels` parameters to tag and label the image with the output from the "meta" step.
+      - name: Build and push Docker image
+        id: push
+        uses: docker/build-push-action@v4
+        with:
+          context: .
+          push: true
+          tags: ${{ steps.meta.outputs.tags }}
+          labels: ${{ steps.meta.outputs.labels }}
+
+      # This step generates an artifact attestation for the image, which is an unforgeable statement about where and how it was built. It increases supply chain security for people who consume the image. For more information, see [Using artifact attestations to establish provenance for builds](/actions/security-guides/using-artifact-attestations-to-establish-provenance-for-builds).
+      - name: Generate artifact attestation
+        uses: actions/attest-build-provenance@v2
+        with:
+          subject-name: ${{ env.REGISTRY }}/${{ env.IMAGE_NAME}}
+          subject-digest: ${{ steps.push.outputs.digest }}
+          push-to-registry: true

.github/workflows/issue-translator.yml

ADDED

@@ -0,0 +1,15 @@
+name: Issue Translator
+on:
+  issue_comment:
+    types: [created]
+  issues:
+    types: [opened]
+
+jobs:
+  build:
+    runs-on: ubuntu-latest
+    steps:
+      - uses: usthe/issues-translate-action@v2.7
+        with:
+          IS_MODIFY_TITLE: false
+          CUSTOM_BOT_NOTE: Bot detected the issue body's language is not English, translate it automatically.

.github/workflows/sync.yml

ADDED

@@ -0,0 +1,39 @@
+name: Upstream Sync
+
+permissions:
+  contents: write
+
+on:
+  schedule:
+    - cron: "0 4 * * *" # At 04:00, every day
+  workflow_dispatch:
+
+jobs:
+  sync_latest_from_upstream:
+    name: Sync latest commits from upstream repo
+    runs-on: ubuntu-latest
+    if: ${{ github.event.repository.fork }}
+
+    steps:
+      # Step 1: run a standard checkout action
+      - name: Checkout target repo
+        uses: actions/checkout@v3
+
+      # Step 2: run the sync action
+      - name: Sync upstream changes
+        id: sync
+        uses: aormsby/Fork-Sync-With-Upstream-action@v3.4
+        with:
+          upstream_sync_repo: u14app/deep-research
+          upstream_sync_branch: main
+          target_sync_branch: main
+          target_repo_token: ${{ secrets.GITHUB_TOKEN }} # automatically generated, no need to set
+
+          # Set test_mode true to run tests instead of the true action!!
+          test_mode: false
+
+      - name: Sync check
+        if: failure()
+        run: |
+          echo "[Error] Due to a change in the workflow file of the upstream repository, GitHub has automatically suspended the scheduled automatic update. You need to manually sync your fork."
+          exit 1
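The schedule and fork guard in this workflow can be illustrated with a short TypeScript sketch. The function names are hypothetical and nothing here comes from the repository. It assumes that the cron expression `0 4 * * *` is evaluated in UTC, as GitHub documents for scheduled workflows, and it ignores any queueing delay on GitHub's side.

```typescript
// Hypothetical helper: the next time "0 4 * * *" would fire strictly after `now`,
// assuming the expression is evaluated in UTC.
function nextDailyRunUtc(now: Date, hour = 4, minute = 0): Date {
  const next = new Date(
    Date.UTC(now.getUTCFullYear(), now.getUTCMonth(), now.getUTCDate(), hour, minute, 0, 0)
  );
  // If today's 04:00 UTC slot has already passed, move to tomorrow.
  if (next.getTime() <= now.getTime()) {
    next.setUTCDate(next.getUTCDate() + 1);
  }
  return next;
}

// Mirrors the job-level guard `if: ${{ github.event.repository.fork }}`:
// the sync job runs only when the repository is a fork.
function shouldSync(repository: { fork: boolean }): boolean {
  return repository.fork;
}

console.log(nextDailyRunUtc(new Date("2024-01-01T05:30:00Z")).toISOString());
// prints "2024-01-02T04:00:00.000Z"
console.log(shouldSync({ fork: false })); // prints false
```

The guard means the job is skipped in the upstream repository itself, so only forks pull in new commits from `u14app/deep-research`.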

.gitignore

ADDED

@@ -0,0 +1,45 @@
+# See https://help.github.com/articles/ignoring-files/ for more about ignoring files.
+
+# dependencies
+/node_modules
+/.pnp
+.pnp.*
+.yarn/*
+!.yarn/patches
+!.yarn/plugins
+!.yarn/releases
+!.yarn/versions
+
+# testing
+/coverage
+
+# next.js
+/.next/
+/out/
+
+# production
+/build
+
+# misc
+.DS_Store
+*.pem
+
+# debug
+npm-debug.log*
+yarn-debug.log*
+yarn-error.log*
+.pnpm-debug.log*
+
+# env files (can opt-in for committing if needed)
+.env*
+
+# vercel
+.vercel
+
+# typescript
+*.tsbuildinfo
+next-env.d.ts
+
+# Serwist
+public/sw*
+public/swe-worker*

.node-version

ADDED

@@ -0,0 +1 @@
+18.18.0

.vscode/settings.json

ADDED

@@ -0,0 +1,7 @@
+{
+  "css.lint.unknownAtRules": "ignore",
+  "i18n-ally.localesPaths": ["src/locales"],
+  "i18n-ally.keystyle": "nested",
+  "i18n-ally.displayLanguage": "en-US",
+  "i18n-ally.sourceLanguage": "en-US"
+}

Dockerfile

ADDED

@@ -0,0 +1,44 @@
+FROM node:18-alpine AS base
+
+# Install dependencies only when needed
+FROM base AS deps
+# Check https://github.com/nodejs/docker-node/tree/b4117f9333da4138b03a546ec926ef50a31506c3#nodealpine to understand why libc6-compat might be needed.
+RUN apk add --no-cache libc6-compat
+
+WORKDIR /app
+
+# Install dependencies based on the preferred package manager
+COPY package.json pnpm-lock.yaml ./
+RUN yarn global add pnpm && pnpm install --frozen-lockfile
+
+# Rebuild the source code only when needed
+FROM base AS builder
+WORKDIR /app
+COPY --from=deps /app/node_modules ./node_modules
+COPY . .
+
+# Next.js collects completely anonymous telemetry data about general usage.
+# Learn more here: https://nextjs.org/telemetry
+# Uncomment the following line in case you want to disable telemetry during the build.
+# ENV NEXT_TELEMETRY_DISABLED=1
+
+RUN yarn run build:standalone
+
+# Production image, copy all the files and run next
+FROM base AS runner
+WORKDIR /app
+
+ENV NODE_ENV=production
+ENV NEXT_PUBLIC_BUILD_MODE=standalone
+
+# Automatically leverage output traces to reduce image size
+# https://nextjs.org/docs/advanced-features/output-file-tracing
+COPY --from=builder /app/.next/standalone ./
+COPY --from=builder /app/.next/static ./.next/static
+COPY --from=builder /app/public ./public
+
+EXPOSE 3000
+
+# server.js is created by next build from the standalone output
+# https://nextjs.org/docs/pages/api-reference/next-config-js/output
+CMD ["node", "server.js"]

LICENSE

ADDED

@@ -0,0 +1,21 @@
+MIT License
+
+Copyright (c) 2024 u14app
+
+Permission is hereby granted, free of charge, to any person obtaining a copy
+of this software and associated documentation files (the "Software"), to deal
+in the Software without restriction, including without limitation the rights
+to use, copy, modify, merge, publish, distribute, sublicense, and/or sell
+copies of the Software, and to permit persons to whom the Software is
+furnished to do so, subject to the following conditions:
+
+The above copyright notice and this permission notice shall be included in all
+copies or substantial portions of the Software.
+
+THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR
+IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,
+FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE
+AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER
+LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,
+OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE
+SOFTWARE.

README.md

ADDED

|

@@ -0,0 +1,459 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

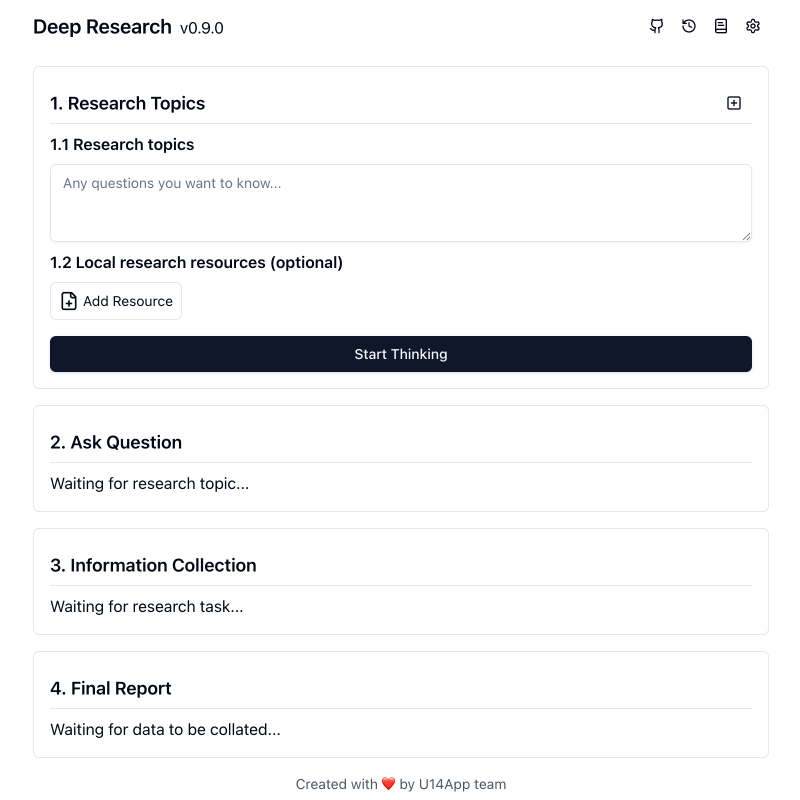

<div align="center">

|

| 2 |

+

<h1>Deep Research</h1>

|

| 3 |

+

|

| 4 |

+

|

| 5 |

+

|

| 6 |

+

|

| 7 |

+

|

| 8 |

+

[](https://opensource.org/licenses/MIT)

|

| 9 |

+

|

| 10 |

+

[](https://ai.google.dev/)

|

| 11 |

+

[](https://nextjs.org/)

|

| 12 |

+

[](https://tailwindcss.com/)

|

| 13 |

+

[](https://ui.shadcn.com/)

|

| 14 |

+

|

| 15 |

+

[](https://vercel.com/new/clone?repository-url=https%3A%2F%2Fgithub.com%2Fu14app%2Fdeep-research&project-name=deep-research&repository-name=deep-research)

|

| 16 |

+

[](./docs/How-to-deploy-to-Cloudflare-Pages.md)

|

| 17 |

+

[](https://research.u14.app/)

|

| 18 |

+

|

| 19 |

+

</div>

|

| 20 |

+

|

| 21 |

+

**Lightning-Fast Deep Research Report**

|

| 22 |

+

|

| 23 |

+

Deep Research uses a variety of powerful AI models to generate in-depth research reports in just a few minutes. It leverages advanced "Thinking" and "Task" models, combined with an internet connection, to provide fast and insightful analysis on a variety of topics. **Your privacy is paramount - all data is processed and stored locally.**

|

| 24 |

+

|

| 25 |

+

## ✨ Features

|

| 26 |

+

|

| 27 |

+

- **Rapid Deep Research:** Generates comprehensive research reports in about 2 minutes, significantly accelerating your research process.

|

| 28 |

+

- **Multi-platform Support:** Supports rapid deployment to Vercel, Cloudflare and other platforms.

|

| 29 |

+

- **Powered by AI:** Utilizes advanced AI models for accurate and insightful analysis.

|

| 30 |

+

- **Privacy-Focused:** Your data remains private and secure, as all data is stored locally on your browser.

|

| 31 |

+

- **Support for Multi-LLM:** Supports a variety of mainstream large language models, including Gemini, OpenAI, Anthropic, Deepseek, Grok, Mistral, Azure OpenAI, any OpenAI Compatible LLMs, OpenRouter, Ollama, etc.

|

| 32 |

+

- **Support Web Search:** Supports search engines such as Searxng, Tavily, Firecrawl, Exa, Bocha, etc., allowing LLMs that do not support search to use the web search function more conveniently.

|

| 33 |

+

- **Thinking & Task Models:** Employs sophisticated "Thinking" and "Task" models to balance depth and speed, ensuring high-quality results quickly. Supports switching research models.

|

| 34 |

+

- **Support Further Research:** You can refine or adjust the research content at any stage of the project and re-run the research from that stage.

|

| 35 |

+

- **Local Knowledge Base:** Supports uploading and processing text, Office, PDF and other resource files to generate a local knowledge base.

|

| 36 |

+

- **Artifact:** Supports editing of research content, with two editing modes: WYSIWYM and Markdown. You can adjust the reading level and article length, and translate the full text.

|

| 37 |

+

- **Knowledge Graph:** Supports one-click generation of a knowledge graph, giving you a systematic understanding of the report content.

|

| 38 |

+

- **Research History:** Preserves your research history, so you can review previous research results at any time and conduct in-depth research again.

|

| 39 |

+

- **Local & Server API Support:** Offers flexibility with both local and server-side API calling options to suit your needs.

|

| 40 |

+

- **Support for SaaS and MCP:** You can use this project as a deep research service (SaaS) through the SSE API, or use it in other AI services through MCP service.

|

| 41 |

+

- **Support PWA:** With Progressive Web App (PWA) technology, you can use the project like a native application.

|

| 42 |

+

- **Support Multi-Key payload:** Supports Multi-Key payload to improve API response efficiency.

|

| 43 |

+

- **Multi-language Support:** English, 简体中文, Español.

|

| 44 |

+

- **Built with Modern Technologies:** Developed using Next.js 15 and Shadcn UI, ensuring a modern, performant, and visually appealing user experience.

|

| 45 |

+

- **MIT Licensed:** Open-source and freely available for personal and commercial use under the MIT License.

|

| 46 |

+

|

| 47 |

+

## 🎯 Roadmap

|

| 48 |

+

|

| 49 |

+

- [x] Support preservation of research history

|

| 50 |

+

- [x] Support editing final report and search results

|

| 51 |

+

- [x] Support for other LLM models

|

| 52 |

+

- [x] Support file upload and local knowledge base

|

| 53 |

+

- [x] Support SSE API and MCP server

|

| 54 |

+

|

| 55 |

+

## 🚀 Getting Started

|

| 56 |

+

|

| 57 |

+

### Use Free Gemini (recommended)

|

| 58 |

+

|

| 59 |

+

1. Get [Gemini API Key](https://aistudio.google.com/app/apikey)

|

| 60 |

+

2. Deploy the project with one click; you can choose to deploy to Vercel or Cloudflare

|

| 61 |

+

|

| 62 |

+

[](https://vercel.com/new/clone?repository-url=https%3A%2F%2Fgithub.com%2Fu14app%2Fdeep-research&project-name=deep-research&repository-name=deep-research)

|

| 63 |

+

|

| 64 |

+

Currently the project supports deployment to Cloudflare, but you need to follow [How to deploy to Cloudflare Pages](./docs/How-to-deploy-to-Cloudflare-Pages.md) to do it.

|

| 65 |

+

|

| 66 |

+

3. Start using

|

| 67 |

+

|

| 68 |

+

### Use Other LLM

|

| 69 |

+

|

| 70 |

+

1. Deploy the project to Vercel or Cloudflare

|

| 71 |

+

2. Set the LLM API key

|

| 72 |

+

3. Set the LLM API base URL (optional)

|

| 73 |

+

4. Start using

|

| 74 |

+

|

| 75 |

+

## ⌨️ Development

|

| 76 |

+

|

| 77 |

+

Follow these steps to get Deep Research running locally in your browser.

|

| 78 |

+

|

| 79 |

+

### Prerequisites

|

| 80 |

+

|

| 81 |

+

- [Node.js](https://nodejs.org/) (version 18.18.0 or later recommended)

|

| 82 |

+

- [pnpm](https://pnpm.io/) or [npm](https://www.npmjs.com/) or [yarn](https://yarnpkg.com/)

|

| 83 |

+

|

| 84 |

+

### Installation

|

| 85 |

+

|

| 86 |

+

1. **Clone the repository:**

|

| 87 |

+

|

| 88 |

+

```bash

|

| 89 |

+

git clone https://github.com/u14app/deep-research.git

|

| 90 |

+

cd deep-research

|

| 91 |

+

```

|

| 92 |

+

|

| 93 |

+

2. **Install dependencies:**

|

| 94 |

+

|

| 95 |

+

```bash

|

| 96 |

+

pnpm install # or npm install or yarn install

|

| 97 |

+

```

|

| 98 |

+

|

| 99 |

+

3. **Set up Environment Variables:**

|

| 100 |

+

|

| 101 |

+

Copy the file `env.tpl` to `.env` (or to `.env.local` for development), or create the file yourself and write the variables to it.

|

| 102 |

+

|

| 103 |

+

```bash

|

| 104 |

+

# For Development

|

| 105 |

+

cp env.tpl .env.local

|

| 106 |

+

# For Production

|

| 107 |

+

cp env.tpl .env

|

| 108 |

+

```

|

| 109 |

+

|

| 110 |

+

4. **Run the development server:**

|

| 111 |

+

|

| 112 |

+

```bash

|

| 113 |

+

pnpm dev # or npm run dev or yarn dev

|

| 114 |

+

```

|

| 115 |

+

|

| 116 |

+

Open your browser and visit [http://localhost:3000](http://localhost:3000) to access Deep Research.

|

| 117 |

+

|

| 118 |

+

### Custom Model List

|

| 119 |

+

|

| 120 |

+

The project allows a custom model list, but this **only works in proxy mode**. Add an environment variable named `NEXT_PUBLIC_MODEL_LIST` in the `.env` file or on the environment variables page of your hosting platform.

|

| 121 |

+

|

| 122 |

+

Custom model lists use `,` to separate multiple models. To disable a model, use the `-` symbol followed by the model name, e.g. `-existing-model-name`. To make only the specified model available, use `-all,+new-model-name`.
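
The list syntax above can be illustrated with a minimal sketch. The function name `applyModelList` is hypothetical and not part of the project, and treating a bare model name the same as `+name` is an assumption; the project's actual parsing may differ.

```typescript
// Hypothetical helper (not from the project): applies a
// NEXT_PUBLIC_MODEL_LIST-style string to a list of known model names.
function applyModelList(available: string[], modelList: string): string[] {
  const enabled = new Set<string>(available);
  for (const raw of modelList.split(",")) {
    const entry = raw.trim();
    if (entry === "") continue;
    if (entry === "-all") {
      // "-all" disables every model seen so far.
      enabled.clear();
    } else if (entry.startsWith("-")) {
      // "-name" disables a single model.
      enabled.delete(entry.slice(1));
    } else {
      // Assumption: "+name" and a bare "name" both enable a model.
      enabled.add(entry.startsWith("+") ? entry.slice(1) : entry);
    }
  }
  return Array.from(enabled);
}

// Example: keep only one model out of the known set.
const onlyNew = applyModelList(["model-a", "model-b"], "-all,+new-model-name");
```

Entries are applied left to right, so `-all` must come before the models that should remain available.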

|

| 123 |

+

|

| 124 |

+

## 🚢 Deployment

|

| 125 |

+

|

| 126 |

+

### Vercel

|

| 127 |

+

|

| 128 |

+

[](https://vercel.com/new/clone?repository-url=https%3A%2F%2Fgithub.com%2Fu14app%2Fdeep-research&project-name=deep-research&repository-name=deep-research)

|

| 129 |

+

|

| 130 |

+

### Cloudflare

|

| 131 |

+

|

| 132 |

+

Currently the project supports deployment to Cloudflare, but you need to follow [How to deploy to Cloudflare Pages](./docs/How-to-deploy-to-Cloudflare-Pages.md) to do it.

|

| 133 |

+

|

| 134 |

+

### Docker

|

| 135 |

+

|

| 136 |

+

> Docker version 20 or above is required; otherwise Docker will report that the image cannot be found.

|

| 137 |

+

|

| 138 |

+

> ⚠️ Note: The Docker image usually lags behind the latest version by 1 to 2 days, so the "update exists" prompt may continue to appear after deployment. This is normal.

|

| 139 |

+

|

| 140 |

+

```bash

|

| 141 |

+

docker pull xiangfa/deep-research:latest

|

| 142 |

+

docker run -d --name deep-research -p 3333:3000 xiangfa/deep-research

|

| 143 |

+

```

|

| 144 |

+

|

| 145 |

+

You can also specify additional environment variables:

|

| 146 |

+

|

| 147 |

+

```bash

|

| 148 |

+

docker run -d --name deep-research \

|

| 149 |

+

-p 3333:3000 \

|

| 150 |

+

-e ACCESS_PASSWORD=your-password \

|

| 151 |

+

-e GOOGLE_GENERATIVE_AI_API_KEY=AIzaSy... \

|

| 152 |

+

xiangfa/deep-research

|

| 153 |

+

```

|

| 154 |

+

|

| 155 |

+

Or build your own Docker image:

|

| 156 |

+

|

| 157 |

+

```bash

|

| 158 |

+

docker build -t deep-research .

|

| 159 |

+

docker run -d --name deep-research -p 3333:3000 deep-research

|

| 160 |

+

```

|

| 161 |

+

|

| 162 |

+

If you need to specify other environment variables, add `-e key=value` to the `docker run` command above.

|

| 163 |

+

|

| 164 |

+

Deploy using `docker-compose.yml`:

|

| 165 |

+

|

| 166 |

+

```yaml

|

| 167 |

+

version: '3.9'

|

| 168 |

+

services:

|

| 169 |

+

  deep-research:

|

| 170 |

+

    image: xiangfa/deep-research

|

| 171 |

+

    container_name: deep-research

|

| 172 |

+

    environment:

|

| 173 |

+

      - ACCESS_PASSWORD=your-password

|

| 174 |

+

      - GOOGLE_GENERATIVE_AI_API_KEY=AIzaSy...

|

| 175 |

+

    ports:

|

| 176 |

+

      - 3333:3000

|

| 177 |

+

```

|

| 178 |

+

|

| 179 |

+

Or build the image yourself with Docker Compose:

|

| 180 |

+

|

| 181 |

+

```bash

|

| 182 |

+

docker compose -f docker-compose.yml build

|

| 183 |

+

```

|

| 184 |

+

|

| 185 |

+

### Static Deployment

|

| 186 |

+

|

| 187 |

+

You can also build a static page version directly, and then upload all files in the `out` directory to any website service that supports static pages, such as GitHub Pages, Cloudflare, Vercel, etc.

|

| 188 |

+

|

| 189 |

+

```bash

|

| 190 |

+

pnpm build:export

|

| 191 |

+

```

|

| 192 |

+

|

| 193 |

+

## ⚙️ Configuration

|

| 194 |

+

|

| 195 |

+

As mentioned in the "Getting Started" section, Deep Research utilizes the following environment variables for server-side API configurations:

|

| 196 |

+

|

| 197 |

+

Please refer to the file [env.tpl](./env.tpl) for all available environment variables.

|

| 198 |

+

|

| 199 |

+

**Important Notes on Environment Variables:**

|

| 200 |

+

|

| 201 |

+

- **Privacy Reminder:** These environment variables are primarily used for **server-side API calls**. When using the **local API mode**, no API keys or server-side configurations are needed, further enhancing your privacy.

|

| 202 |

+

|

| 203 |

+

- **Multi-key Support:** Supports multiple keys, separated by `,`, e.g. `key1,key2,key3`.

|

| 204 |

+

|

| 205 |

+

- **Security Setting:** By setting `ACCESS_PASSWORD`, you can better protect the security of the server API.

|

| 206 |

+

|

| 207 |

+

- **Make variables effective:** After adding or modifying these environment variables, please redeploy the project for the changes to take effect.
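
As a rough illustration of the multi-key format noted above, the sketch below splits a comma-separated value and hands out keys in turn. The names `parseKeys` and `createKeyRotator` are hypothetical, and round-robin selection is an assumption; the README does not state how the project chooses among keys.

```typescript
// Hypothetical helpers (not from the project).
// Splits "key1,key2,key3" into individual keys, ignoring stray whitespace.
function parseKeys(value: string): string[] {
  return value
    .split(",")
    .map((key) => key.trim())
    .filter((key) => key.length > 0);
}

// Assumption: keys are used in round-robin order. The project's real
// selection strategy is not documented here and may differ.
function createKeyRotator(value: string): () => string {
  const keys = parseKeys(value);
  if (keys.length === 0) {
    throw new Error("no API keys configured");
  }
  let index = 0;
  return () => {
    const key = keys[index];
    index = (index + 1) % keys.length;
    return key;
  };
}

const nextKey = createKeyRotator("key1, key2,key3");
```

Each call to `nextKey()` returns the next key and wraps around after the last one.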

|

| 208 |

+

|

| 209 |

+

## 📄 API documentation

|

| 210 |

+

|

| 211 |

+

Currently the project supports two forms of API: Server-Sent Events (SSE) and Model Context Protocol (MCP).

|

| 212 |

+

|

| 213 |

+

### Server-Sent Events API

|

| 214 |

+

|

| 215 |

+

The Deep Research API provides a real-time interface for initiating and monitoring complex research tasks.

|

| 216 |

+

|

| 217 |

+

We recommend calling the API via `@microsoft/fetch-event-source`. To get the final report, listen to the `message` event; the data is returned as a text stream.
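
To make the event handling concrete, here is a minimal sketch of parsing Server-Sent Events text and keeping only the `message` events. It follows the generic SSE wire format (`event:` and `data:` fields, frames separated by a blank line). The helper names and the `progress` event in the example are hypothetical, the payload is treated as an opaque string, and the whole response is assumed to be buffered already; the exact payload shape is defined in the detailed API documentation.

```typescript
// Hypothetical types and helpers (not from the project).
interface SseEvent {
  event: string;
  data: string;
}

// Parses fully buffered SSE text into events. A streaming client would
// also need to handle frames split across network chunks.
function parseSseFrames(text: string): SseEvent[] {
  const events: SseEvent[] = [];
  for (const frame of text.split(/\r?\n\r?\n/)) {
    let event = "message"; // default event name in the SSE format
    const dataLines: string[] = [];
    for (const line of frame.split(/\r?\n/)) {
      if (line.startsWith("event:")) {
        event = line.slice("event:".length).trim();
      } else if (line.startsWith("data:")) {
        // One optional leading space after the colon is not part of the data.
        dataLines.push(line.slice("data:".length).replace(/^ /, ""));
      }
    }
    if (dataLines.length > 0) {
      events.push({ event, data: dataLines.join("\n") });
    }
  }
  return events;
}

// Keeps only the payloads of `message` events, in arrival order.
function collectMessages(events: SseEvent[]): string[] {
  return events.filter((e) => e.event === "message").map((e) => e.data);
}

const sample =
  "event: progress\ndata: working\n\n" +
  "event: message\ndata: Hello\n\n" +
  "event: message\ndata: World\n\n";
const messages = collectMessages(parseSseFrames(sample));
```

A library such as `@microsoft/fetch-event-source` performs this framing for you and delivers each event to a callback, so hand-written parsing is only needed when working with the raw response body.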

|

| 218 |

+

|

| 219 |

+

#### POST method

|

| 220 |

+

|

| 221 |

+

Endpoint: `/api/sse`

|

| 222 |

+

|

| 223 |

+

Method: `POST`

|

| 224 |

+

|

| 225 |

+

Body:

|

| 226 |

+

|

| 227 |

+

```typescript

|

| 228 |

+

interface SSEConfig {

|

| 229 |

+

// Research topic

|

| 230 |

+

query: string;

|

| 231 |

+

// AI provider, Possible values include: google, openai, anthropic, deepseek, xai, mistral, azure, openrouter, openaicompatible, pollinations, ollama

|

| 232 |

+

provider: string;

|

| 233 |

+

// Thinking model id

|

| 234 |

+

thinkingModel: string;

|

| 235 |

+

// Task model id

|

| 236 |

+

taskModel: string;

|

| 237 |

+

// Search provider, Possible values include: model, tavily, firecrawl, exa, bocha, searxng

|

| 238 |

+

searchProvider: string;

|

| 239 |

+

// Response Language, also affects the search language. (optional)

|

| 240 |

+

language?: string;

|

| 241 |

+

// Maximum number of search results. Default, `5` (optional)

|

| 242 |

+

maxResult?: number;

|

| 243 |

+

// Whether to include content-related images in the final report. Default, `true`. (optional)

|

| 244 |

+

enableCitationImage?: boolean;

|

| 245 |

+

// Whether to include citation links in search results and final reports. Default, `true`. (optional)

|

| 246 |

+

enableReferences?: boolean;

|

| 247 |

+

}

|

| 248 |

+

```

|

| 249 |

+

|

| 250 |

+

Headers:

|

| 251 |

+

|

| 252 |

+

```typescript

|

| 253 |

+

interface Headers {

|

| 254 |

+

"Content-Type": "application/json";

|

| 255 |

+

// If you set an access password

|

| 256 |

+

// Authorization: "Bearer YOUR_ACCESS_PASSWORD";

|

| 257 |

+

}

|

| 258 |

+

```

|

| 259 |

+

|

| 260 |

+

See the detailed [API documentation](./docs/deep-research-api-doc.md).
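
The request described above can be assembled as in the following sketch. The function `buildSseRequest`, the base URL, and the model ids in the example are placeholders chosen for illustration; only the endpoint path, method, body fields, and headers come from this section.

```typescript
// Mirrors the request body documented above.
interface SSEConfig {
  query: string;
  provider: string;
  thinkingModel: string;
  taskModel: string;
  searchProvider: string;
  language?: string;
  maxResult?: number;
  enableCitationImage?: boolean;
  enableReferences?: boolean;
}

// Hypothetical helper (not from the project): builds the URL and request
// options for POST /api/sse without sending anything.
function buildSseRequest(
  baseUrl: string,
  config: SSEConfig,
  accessPassword?: string
): { url: string; init: { method: string; headers: Record<string, string>; body: string } } {
  const headers: Record<string, string> = {
    "Content-Type": "application/json",
  };
  // Only needed when ACCESS_PASSWORD is set on the server.
  if (accessPassword) {
    headers["Authorization"] = `Bearer ${accessPassword}`;
  }
  return {
    // Strip trailing slashes so the path is not doubled.
    url: `${baseUrl.replace(/\/+$/, "")}/api/sse`,
    init: { method: "POST", headers, body: JSON.stringify(config) },
  };
}

// Placeholder values; substitute your own deployment URL and model ids.
const request = buildSseRequest(
  "http://localhost:3000/",
  {
    query: "History of the transistor",
    provider: "google",
    thinkingModel: "your-thinking-model-id",
    taskModel: "your-task-model-id",
    searchProvider: "model",
    maxResult: 5,
  },
  "your-password"
);
```

The resulting `request.url` and `request.init` can be passed to `fetch`, or to `fetchEventSource` together with an `onmessage` callback.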

|

| 261 |

+

|

| 262 |

+

#### GET method

|

| 263 |

+

|

| 264 |

+

This is an interesting implementation. You can watch the whole process of deep research directly through the URL just like watching a video.

|

| 265 |

+

|